Artificial intelligence: how to address ESG concerns

Photo by Markus Winkler on Unsplash

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore, we should have to expect the machines to take control.”

– Alan M. Turing, 1951 lecture “Intelligent Machinery, A Heretical Theory”.

The latest rage in tech is chatbots powered by artificial intelligence (AI). Tech companies are scrambling to develop ever more capable machines and to popularize them. However, outside the immediate spotlight on AI, technology that processes large volumes of data with powerful algorithms[1] is nothing new.

What is new is the growing acknowledgment that the seemingly limitless opportunities these technologies bring also carry risks and impacts related to environmental, social, and governance (ESG) aspects. This blog post highlights concerns with AI technology that need to be addressed appropriately, suggests questions that banks, investors, and AI users should be asking, and outlines what to expect in terms of regulatory responses.

AI defined

Artificial intelligence is an umbrella term that includes machine learning, deep learning, and natural language processing. AI relies on extensive computing power and massive amounts of data processed by algorithms. AI systems can process data and information in a way that resembles intelligent behavior, which typically includes aspects of reasoning, learning, perception, prediction, planning, or control.

Applying AI

The technology is being embedded in the day-to-day activities of organizations in many different sectors, including financial services, health care, and education, as well as in policing and the judiciary.[2] Some examples of practical applications include:

- Financial services: facial and voice recognition for account access, portfolio optimization and investment advice, credit risk assessment, document management and claims processing, human resources services, and fraud detection.

- Health care: automated scheduling, improved data flow, patient care personalization, targeted diagnostics, pattern identification, and research and development.

- Education: administrative support, teaching support (assessments, practice, personalized tutoring, and feedback), learning support (recommending courses and relevant texts), research support (recommending articles, building models).

- Police: video surveillance and smart cameras, facial recognition, automated urban tolling (e.g., access to inner-city emissions zones), and analyzing crime patterns and making predictions.

- Legal services: legal analytics (assessing whether to take a case, calculating the probability of an outcome, generating a rough draft of a verdict or settlement), identifying precedent cases, and analyzing a judge’s record of decisions or a law firm’s prior litigation history.

Risks and impacts

The data analysis and logic capabilities of AI are immensely powerful, but the technology has demonstrated severe weaknesses in supporting societal values such as fairness, justice, and equity. Making sense of human reality? Understanding causality and cultural nuances? At present, AI’s ability on these fronts is inadequate.

AI reflects the humans who create it. This means our biases and prejudices, and the subjective experiences of developers, can inadvertently be built into algorithms and models and, ultimately, the results generated. Here are just a few risks that have been pointed out:

- Algorithmic bias: the use of algorithm-supported decision-making in financial services, health care, or education may deliver outputs that unintentionally lead to decisions that adversely affect groups based on characteristics such as ethnicity, gender, sexual orientation, religion, criminal history, family status, etc.

- Human rights violations: in law enforcement, facial recognition technologies and other forms of surveillance carry potential for human rights violations, which range from violations of the right to privacy and free expression of all citizens to the systematic curtailing of civil and political rights of political opposition figures or human rights defenders.

- Environmental damage: AI depends on hardware composed of metals and minerals whose extraction may cause environmental damage and affect the human rights of workers and their communities; powerful computing consumes large amounts of electricity, which may have significant climate impacts.
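To make the algorithmic-bias risk concrete, here is a minimal sketch of one widely used screening check, the disparate impact ratio: each group's rate of favorable outcomes (for example, loan approvals) divided by the rate of the most favored group. The 0.8 threshold is the "four-fifths rule" heuristic from US employment-selection guidance; it is a swapped-in illustration, not a test prescribed by the sources discussed in this post, and the function names, group labels, and data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favorable outcomes per group.

    `decisions` is an iterable of (group, favorable) pairs, e.g. the
    approve/deny outputs of a credit model. Labels are hypothetical.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(decisions, threshold=0.8):
    """Ratio of each group's selection rate to the highest group's rate.

    Returns {group: (ratio, flagged)}. A ratio below `threshold`
    (the four-fifths heuristic) flags the group for closer review.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no favorable outcomes in any group")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


if __name__ == "__main__":
    # Hypothetical outputs: group A approved 60 of 100, group B 40 of 100.
    sample = [("A", i < 60) for i in range(100)] + [("B", i < 40) for i in range(100)]
    for group, (ratio, flagged) in sorted(disparate_impact(sample).items()):
        print(f"group {group}: ratio {ratio:.2f}, flagged={flagged}")
```

A flag is a prompt for investigation rather than proof of discrimination, and a ratio close to 1 does not rule out bias elsewhere, for instance in error rates that differ between groups.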

Mitigation

Under the UN Guiding Principles on Business and Human Rights and the OECD Guidelines for Multinational Enterprises, and under the proposed EU Corporate Sustainability Due Diligence Directive, companies and investors are expected (and, under the proposed directive, would be required) to avoid causing or contributing to adverse impacts on human rights and the environment, and to seek to prevent or mitigate adverse impacts that are directly linked to their operations through business relationships (which include financial investments).

Not only developers of AI solutions but also, importantly, users of AI technologies are well advised to apply their risk management and due diligence procedures to analyze the risks and adverse impacts of deploying or applying AI functionality.

Research within ECOFACT’s Monitoring Peer Policies has so far not identified any noteworthy integration of AI risks and impacts into the policies and commitments of financial services companies, with the exception of the topic of “surveillance” in some human rights policies.

Principles for responsible use

Standards for “responsible AI,” which serve to proactively minimize such risks and impacts, have started to take shape. At the international level, key recommendations and principles include the OECD Principles on Artificial Intelligence (May 2019), the G20 Ministerial Statement on Trade and Digital Economy (G20 AI Principles) (June 2019), and the UNESCO Recommendation on the Ethics of Artificial Intelligence (adopted by 193 countries in November 2021).

The most important principles common to these recommendations include:

- Proportionality and do no harm: the use of AI systems should satisfy the following conditions:

  - The AI method chosen should be appropriate and proportionate to achieve a given legitimate aim;

  - The AI method chosen must not violate or abuse human rights;

  - The AI method should be appropriate to the context and be based on rigorous scientific foundations; and

  - In scenarios where decisions could have an irreversible impact or may involve life-and-death decisions, final human determination should apply.

- Accountability: there should be clear roles and responsibilities allocated for overseeing AI integration and monitoring, and an emphasis on human judgment, review, and intervention in model development and (final) decision-making.

- Transparency:

  - Make clear how AI algorithms arrive at their outcomes;

  - Document AI development, processes (including decision-making), and data sets; and

  - Externally disclose (i) all uses of AI-driven decisions, (ii) data used to make AI-driven decisions and how the data affects the decisions, and (iii) consequences of AI-driven decisions.

- Fairness: prevent and address biases in AI algorithms that could lead to discriminatory outcomes. Examples include:

  - Require developer companies to have a code of conduct or ethics that promotes non-discriminatory practices;

  - Actively seek diversity and wide representation in the input data;

  - Carefully review the training and validation data used to teach AI models and inform AI processes; and

  - Embed rules that prevent discrimination into AI models.

- Sustainability: AI technologies can either benefit sustainability objectives or hinder their realization, depending on how they are applied. AI technologies should therefore be assessed for their human, social, cultural, economic, and environmental benefits and adverse impacts.

- Ethics: ensure that AI use will not exploit or harm customers, either through biases and discrimination or through illegally obtained information, and ensure that privacy and data protection, non-discrimination and equality, diversity, inclusion, and social justice are prioritized.
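The proportionality, accountability, and transparency principles above translate fairly directly into how an AI-assisted decision process is wired. The minimal sketch below assumes a hypothetical credit-decision workflow: it logs every model output together with the model version and the data it relied on, lets low-impact outputs take effect automatically, and holds high-impact cases (irreversible or life-and-death decisions) until a named human reviewer makes the final determination. The class, function, and field names are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


@dataclass
class DecisionRecord:
    """Audit-trail entry for one AI-assisted decision (hypothetical schema)."""
    case_id: str
    model_version: str            # which model produced the output
    inputs_used: Dict[str, Any]   # data the model relied on (transparency)
    model_output: str             # the model's recommendation
    high_impact: bool             # irreversible or life-and-death consequences
    final_decision: Optional[str] = None
    decided_by: str = "pending"   # "model" or the ID of a human reviewer
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def route_decision(case_id, model_version, inputs_used, model_output,
                   high_impact, audit_log):
    """Log every AI output; only low-impact outputs take effect automatically."""
    record = DecisionRecord(case_id, model_version, dict(inputs_used),
                            model_output, high_impact)
    if not high_impact:
        record.final_decision = model_output
        record.decided_by = "model"
    # High-impact cases stay pending until a human makes the final call.
    audit_log.append(record)
    return record


def record_human_decision(record, reviewer, decision):
    """A named reviewer makes, and is accountable for, the final determination."""
    if record.final_decision is not None:
        raise ValueError("case already has a final decision")
    record.final_decision = decision
    record.decided_by = reviewer
    return record


if __name__ == "__main__":
    log: List[DecisionRecord] = []
    route_decision("case-1", "credit-model-v3", {"income": 42000}, "approve", False, log)
    pending = route_decision("case-2", "credit-model-v3", {"income": 18000}, "deny", True, log)
    record_human_decision(pending, "reviewer-17", "approve")
    for r in log:
        print(r.case_id, r.model_output, "->", r.final_decision, "by", r.decided_by)
```

Keeping the model's recommendation and the human's final decision as separate fields preserves a record of where reviewers overrode the model, which supports both the documentation and the disclosure duties listed under transparency.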

Regulation

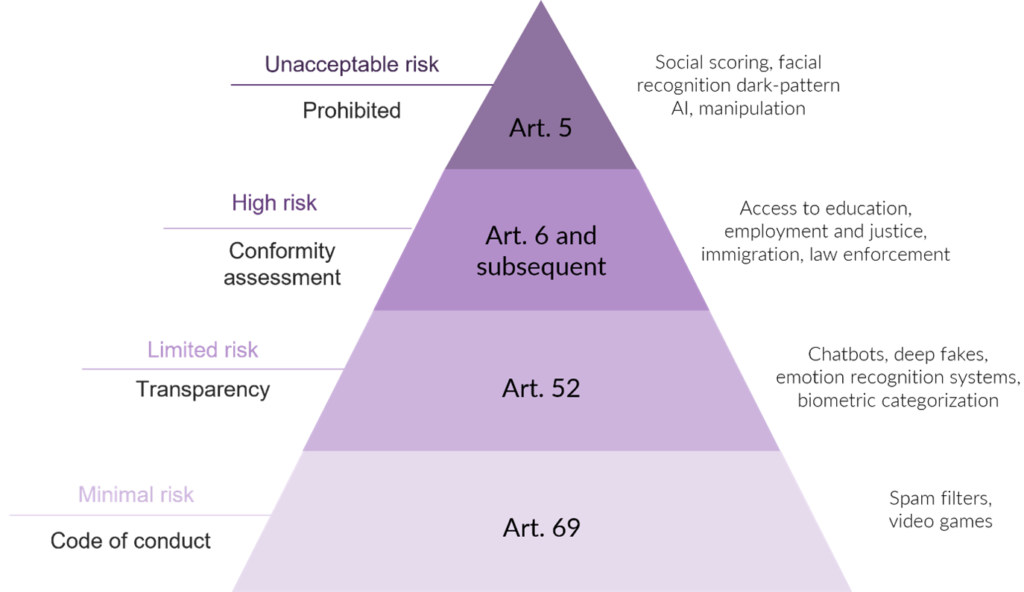

These recommendations (soft law) from international bodies are complemented by voluntary principles at the national level, such as those of Australia, Canada, the United States, Switzerland and others. However, some jurisdictions are moving beyond mere suggestions and taking a regulatory approach (hard law). The European Union is pioneering an enforceable law with its proposed Artificial Intelligence Act, which is currently being discussed in the European Parliament. The proposed law assigns applications of AI to four risk categories:

Figure 1. The EU’s proposed Artificial Intelligence Act details risk categories and prohibits certain applications of the technology; it also proposes significant fines for non-compliance

If the Act is passed as proposed, non-compliance of an AI system with its requirements would be subject to administrative fines of up to EUR 20 million or, if the offender is a company, up to 4 percent of its total worldwide annual turnover for the preceding financial year, whichever is higher. Supplying incorrect information to designated supervisory bodies could result in administrative fines of up to EUR 10 million or up to 2 percent of the company’s total worldwide annual turnover.
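The “whichever is higher” rule is simply a maximum of two amounts. The minimal sketch below computes the fine ceiling for the EUR 20 million / 4 percent tier described above; the function name and example turnover figures are hypothetical, and the turnover-based alternative is applied only when the offender is a company, as the proposal states.

```python
def max_fine_eur(turnover_eur: int, is_company: bool = True,
                 cap_eur: int = 20_000_000, pct: int = 4) -> int:
    """Ceiling of an administrative fine under a 'fixed amount or percentage
    of worldwide annual turnover, whichever is higher' rule.

    Defaults model the EUR 20 million / 4 percent tier of the proposed
    AI Act as described in the text (hypothetical helper).
    """
    if turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    if not is_company:
        # The turnover-based alternative applies only to companies.
        return cap_eur
    # pct percent of the preceding year's total worldwide annual turnover,
    # in integer arithmetic, rounded down to whole euros.
    turnover_based = turnover_eur * pct // 100
    # 'Whichever is higher': the larger amount is the ceiling.
    return max(cap_eur, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        print(f"turnover EUR {turnover:,} -> max fine EUR {max_fine_eur(turnover):,}")
```

The two amounts break even at a turnover of EUR 500 million (4 percent of 500 million is 20 million); above that, the percentage dominates, so a company with EUR 2 billion in turnover would face a ceiling of EUR 80 million. Passing `cap_eur=10_000_000, pct=2` would model the incorrect-information tier only if the same “whichever is higher” rule applies there, which the paragraph above does not state.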

Individual countries such as Italy have already acted against one of the best-known AI applications, ChatGPT. At the end of March 2023, citing a data breach as well as concerns about factually incorrect responses and the absence of age restrictions for users, the Italian data protection regulator ordered a temporary stop to the processing of Italian users’ data. France, Germany, and Ireland are considering a similar move, while the UK has announced it plans to regulate AI.

At ECOFACT, we closely monitor ESG developments. We can help you understand these developments and align with evolving stakeholder and regulatory expectations. If you need support in adjusting your company’s approach to AI or human rights, do not hesitate to reach out.

[1] An algorithm is a procedure used for solving a problem or performing a computation. Algorithms act as an exact list of instructions that conduct specified actions step by step in either hardware- or software-based routines. Algorithms are widely used throughout all areas of IT.

[2] Use cases collected from the following sources: BSR: Why Every Business Needs to Think about Responsible AI (April 2023), Nvidia: State of AI in Financial Services (February 2023), Health Tech Magazine (December 2022), Inside Higher Ed: How AI is Shaping the Future of Higher Ed (March 2023), Deloitte: Surveillance and Predictive Policing Through AI (2021), CBC News: While courts use fax machines, law firms are using AI to tailor arguments for judges (March 2023).
